Amazon DAS-C01 dumps - 100% Pass Guarantee!

Vendor: Amazon

Certifications: Amazon Certifications

Exam Name: AWS Certified Data Analytics - Specialty (DAS-C01)

Exam Code: DAS-C01

Total Questions: 285 Q&As

Last Updated: Mar 13, 2025

Note: The product is available for instant download. Sign in and click My Account to download it.

PDF

- Q&As Identical to the VCE Product

- Windows, Mac, Linux, Mobile Phone

- Printable PDF without Watermark

- Instant Download Access

- Download Free PDF Demo

- Includes 365 Days of Free Updates

VCE

- Q&As Identical to the PDF Product

- Windows Only

- Simulates a Real Exam Environment

- Review Test History and Performance

- Instant Download Access

- Includes 365 Days of Free Updates

Amazon DAS-C01 Last Month Results

- 99.1% Pass Rate

- 365 Days Free Update

- Verified By Professional IT Experts

- 24/7 Live Support

- Instant Download PDF&VCE

- 3 Days Preparation Before Test

- 18 Years Experience

- 6000+ IT Exam Dumps

- 100% Safe Shopping Experience

DAS-C01 Q&A's Detail

| Exam Code: | DAS-C01 |
| Total Questions: | 285 |

CertBus Has the Latest DAS-C01 Exam Dumps in Both PDF and VCE Format

- Amazon_certbus_DAS-C01_by_network_VPN_242.pdf (227.74 KB)

- Amazon_certbus_DAS-C01_by_Manni_272.pdf (225.37 KB)

- Amazon_certbus_DAS-C01_by_Manish_Kumar_276.pdf (225.68 KB)

- Amazon_certbus_DAS-C01_by_maybeeccie_274.pdf (225.9 KB)

- Amazon_certbus_DAS-C01_by_sanjay_kapur_268.pdf (225.68 KB)

- Amazon_certbus_DAS-C01_by_BURN_CASH_-SRI_LANKA-_248.pdf (225.86 KB)

DAS-C01 Online Practice Questions and Answers

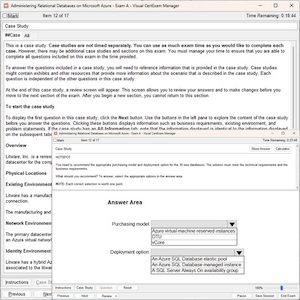

A company has a business unit uploading .csv files to an Amazon S3 bucket. The company's data platform team has set up an AWS Glue crawler to do discovery, and create tables and schemas. An AWS Glue job writes processed data from the created tables to an Amazon Redshift database. The AWS Glue job handles column mapping and creating the Amazon Redshift table appropriately. When the AWS Glue job is rerun for any reason in a day, duplicate records are introduced into the Amazon Redshift table.

Which solution will update the Redshift table without duplicates when jobs are rerun?

A. Modify the AWS Glue job to copy the rows into a staging table. Add SQL commands to replace the existing rows in the main table as postactions in the DynamicFrameWriter class.

B. Load the previously inserted data into a MySQL database in the AWS Glue job. Perform an upsert operation in MySQL, and copy the results to the Amazon Redshift table.

C. Use Apache Spark's DataFrame dropDuplicates() API to eliminate duplicates and then write the data to Amazon Redshift.

D. Use the AWS Glue ResolveChoice built-in transform to select the most recent value of the column.
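Option A describes a staging-table pattern. As a rough illustration only, the sketch below builds the kind of SQL that could be supplied as `preactions` and `postactions`. The table names (`sales`, `sales_staging`) and the key column (`order_id`) are hypothetical, and the sketch assumes the target table already exists. In a Glue job, a dictionary like this would typically be merged into the `connection_options` passed to `write_dynamic_frame.from_jdbc_conf`, together with other required keys such as `database`.

```python
from __future__ import annotations


def build_staging_options(target: str, staging: str, key_columns: list[str]) -> dict[str, str]:
    """Build hypothetical Redshift write options for a staging-table merge."""
    if not key_columns:
        raise ValueError("at least one key column is required")

    # Rows match when every key column is equal in both tables.
    match = " AND ".join(f"{target}.{col} = {staging}.{col}" for col in key_columns)

    # Runs before the load: start each run with an empty staging table.
    preactions = (
        f"DROP TABLE IF EXISTS {staging}; "
        f"CREATE TABLE {staging} (LIKE {target});"
    )

    # Runs after the load: replace matching rows inside one transaction,
    # so a rerun overwrites earlier rows instead of duplicating them.
    postactions = (
        "BEGIN; "
        f"DELETE FROM {target} USING {staging} WHERE {match}; "
        f"INSERT INTO {target} SELECT * FROM {staging}; "
        f"DROP TABLE {staging}; "
        "END;"
    )
    return {"dbtable": staging, "preactions": preactions, "postactions": postactions}


if __name__ == "__main__":
    # Hypothetical table and key names, for illustration only.
    options = build_staging_options("sales", "sales_staging", ["order_id"])
    print(options["postactions"])
```

Because the delete and insert run in a single transaction, readers of the main table should not observe a state where the old rows are gone but the new ones have not yet arrived.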

A company wants to improve the data load time of a sales data dashboard. Data has been collected as .csv files and stored within an Amazon S3 bucket that is partitioned by date. The data is then loaded to an Amazon Redshift data warehouse for frequent analysis. The data volume is up to 500 GB per day.

Which solution will improve the data loading performance?

A. Compress .csv files and use an INSERT statement to ingest data into Amazon Redshift.

B. Split large .csv files, then use a COPY command to load data into Amazon Redshift.

C. Use Amazon Kinesis Data Firehose to ingest data into Amazon Redshift.

D. Load the .csv files in an unsorted key order and vacuum the table in Amazon Redshift.
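Option B refers to splitting files before a COPY. The sketch below shows one way this might look, under stated assumptions: the helper names, the `sales` table, the S3 prefix, and the IAM role ARN are all hypothetical, and each chunk repeats the header row so that `IGNOREHEADER 1` applies to every file. Redshift's COPY command loads all objects that share a key prefix, which lets it read the parts in parallel.

```python
from __future__ import annotations


def split_csv_lines(lines: list[str], parts: int) -> list[list[str]]:
    """Split CSV lines (header first) into up to `parts` chunks, each with the header."""
    if parts < 1:
        raise ValueError("parts must be at least 1")
    header, rows = lines[0], lines[1:]
    # Ceiling division so no chunk exceeds the target size.
    size = max(1, -(-len(rows) // parts))
    return [[header] + rows[i:i + size] for i in range(0, len(rows), size)]


def build_copy_command(table: str, s3_prefix: str, iam_role: str) -> str:
    """Build a COPY statement that loads every object under one S3 prefix."""
    return (
        f"COPY {table} FROM '{s3_prefix}' "
        f"IAM_ROLE '{iam_role}' "
        "FORMAT AS CSV IGNOREHEADER 1;"
    )


if __name__ == "__main__":
    # Hypothetical sample data: a header plus ten rows.
    lines = ["id,amount"] + [f"{i},{i * 10}" for i in range(10)]
    for n, chunk in enumerate(split_csv_lines(lines, 4)):
        print(f"part-{n:04d}.csv has {len(chunk) - 1} rows")
    print(build_copy_command(
        "sales",
        "s3://example-bucket/sales/2025-03-13/part-",  # hypothetical prefix
        "arn:aws:iam::123456789012:role/ExampleRedshiftRole",  # hypothetical role
    ))
```

AWS guidance generally suggests choosing a number of files that is a multiple of the number of slices in the cluster, so the part count here would be tuned to the cluster rather than fixed.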

An online retail company with millions of users around the globe wants to improve its ecommerce analytics capabilities. Currently, clickstream data is uploaded directly to Amazon S3 as compressed files. Several times each day, an application running on Amazon EC2 processes the data and makes search options and reports available for visualization by editors and marketers. The company wants to make website clicks and aggregated data available to editors and marketers in minutes to enable them to connect with users more effectively.

Which options will help meet these requirements in the MOST efficient way? (Choose two.)

A. Use Amazon Kinesis Data Firehose to upload compressed and batched clickstream records to Amazon OpenSearch Service (Amazon Elasticsearch Service).

B. Upload clickstream records to Amazon S3 as compressed files. Then use AWS Lambda to send data to Amazon OpenSearch Service (Amazon Elasticsearch Service) from Amazon S3.

C. Use Amazon OpenSearch Service (Amazon Elasticsearch Service) deployed on Amazon EC2 to aggregate, filter, and process the data. Refresh content performance dashboards in near-real time.

D. Use OpenSearch Dashboards (Kibana) to aggregate, filter, and visualize the data stored in Amazon OpenSearch Service (Amazon Elasticsearch Service). Refresh content performance dashboards in near-real time.

E. Upload clickstream records from Amazon S3 to Amazon Kinesis Data Streams and use a Kinesis Data Streams consumer to send records to Amazon OpenSearch Service (Amazon Elasticsearch Service).

A data analytics specialist is maintaining a company's on-premises Apache Hadoop environment. In this environment, the company uses Apache Spark jobs for data transformation and uses Apache Presto for on-demand queries. The Spark jobs consist of many intermediate steps that require high-speed random I/O during processing. Some jobs can be restarted without losing the original data.

The data analytics specialist decides to migrate the workload to an Amazon EMR cluster. The data analytics specialist must implement a solution that will scale the cluster automatically.

Which solution will meet these requirements with the FASTEST I/O?

A. Use Hadoop Distributed File System (HDFS). Configure the EMR cluster as an instance fleet with custom automatic scaling.

B. Use EMR File System (EMRFS). Configure the EMR cluster as a uniform instance group with EMR managed scaling.

C. Use Hadoop Distributed File System (HDFS). Configure the EMR cluster as an instance group with custom automatic scaling.

D. Use EMR File System (EMRFS). Configure the EMR cluster as an instance fleet with custom automatic scaling.

A company has a fitness tracker application that generates data from subscribers. The company needs real-time reporting on this data. The data is sent immediately, and the processing latency must be less than 1 second. The company wants to perform anomaly detection on the data as the data is collected. The company also requires a solution that minimizes operational overhead.

Which solution meets these requirements?

A. Amazon EMR cluster with Apache Spark streaming, Spark SQL, and Spark's machine learning library (MLlib)

B. Amazon Kinesis Data Firehose with Amazon S3 and Amazon Athena

C. Amazon Kinesis Data Firehose with Amazon QuickSight

D. Amazon Kinesis Data Streams with Amazon Kinesis Data Analytics

Success Stories

- Lueilwitz

- Corkery

- Mar 22, 2025

- Rating: 5.0 / 5.0

I passed my DAS-C01 exam with these dumps, so I want to share some tips with you. Check the exam outline. You need to know which topics are required in the actual exam. Then you can target your plan. Spend more time on the topics that are much harder than others. I got all the same questions from these dumps. Some may have changed slightly (the sequence of the options, for example). So be sure to read your questions carefully. That's the most important tip for all candidates.

- Turkey

- BAHMAN

- Mar 21, 2025

- Rating: 4.6 / 5.0

About 3 questions were different, but the rest were enough to pass. I passed successfully.

- London

- Kelly

- Mar 19, 2025

- Rating: 5.0 / 5.0

This resource was colossally helpful during my DAS-C01 studies. The practice tests are decent, and the downloadable content was great. I used this and two other textbooks as my primary resources, and I passed! Thank you!

- Egypt

- Obed

- Mar 19, 2025

- Rating: 4.2 / 5.0

Nice study material, I passed the exam with the help of it. Recommend strongly.

- United States

- _q_

- Mar 18, 2025

- Rating: 4.9 / 5.0

Do not rely on dumps to pass the exam.

Utilize GNS3 or real equipment to learn the technology.

Please do not degrade the value of this Cisco Cert.

- Morocco

- Zack

- Mar 17, 2025

- Rating: 4.8 / 5.0

I passed today. In my opinion, these dumps are enough to pass the exam. Good luck to you.

- United States

- Alex

- Mar 16, 2025

- Rating: 4.6 / 5.0

These are the latest dumps and all the answers are accurate. You can trust them. Recommended.

- United Kingdom

- Harold

- Mar 16, 2025

- Rating: 4.3 / 5.0

Dump valid! Only 3 new questions but they are easy.

- Russian Federation

- Karel

- Mar 14, 2025

- Rating: 4.9 / 5.0

Passed the exam today. All the questions were from these dumps, so you can trust them.

- Sault Au Mouton

- Robert

- Mar 14, 2025

- Rating: 5.0 / 5.0

I'm sure these dumps are valid. I checked the reviews on the internet and finally chose their site. The dumps proved I made the right decision. I passed my exam and got a pretty nice result. I prepared for the 200-310 exam with the latest 400+Qs version. First, I spent about one week reading the dumps. Then I checked some questions on the net. This is enough for you if you just want to pass the exam. Register for a relevant course if you have enough time. Good luck!

Amazon DAS-C01 exam official information: This credential helps organizations identify and develop talent with critical skills for implementing cloud initiatives. Earning AWS Certified Data Analytics – Specialty validates expertise in using AWS data lakes and analytics services to get insights from data.
