DAS-C01 Online Practice Questions and Answers

An Amazon Redshift database contains sensitive user data. Logging is necessary to meet compliance requirements. The logs must contain database authentication attempts, connections, and disconnections. The logs must also contain each query run against the database and record which database user ran each query.

Which steps will create the required logs?

A. Enable Amazon Redshift Enhanced VPC Routing. Enable VPC Flow Logs to monitor traffic.

B. Allow access to the Amazon Redshift database using AWS IAM only. Log access using AWS CloudTrail.

C. Enable audit logging for Amazon Redshift using the AWS Management Console or the AWS CLI.

D. Enable and download audit reports from AWS Artifact.
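The audit logging mechanism named in option C can be sketched as the request payloads for two boto3 Redshift client calls. This is a minimal sketch: the cluster, bucket, and parameter group names are hypothetical, and nothing is sent to AWS. The connection log records authentication attempts, connections, and disconnections. The user activity log records each query and the database user who ran it, and it is written only when `enable_user_activity_logging` is set to true.

```python
# Sketch only: builds keyword arguments for two boto3 Redshift client calls.
# Cluster, bucket, and parameter group names are hypothetical placeholders.


def audit_logging_requests(cluster_id: str, bucket: str, param_group: str) -> dict:
    """Return request payloads that turn on Redshift audit logging."""
    # enable_logging delivers the audit logs, including the connection log
    # (authentication attempts, connections, disconnections), to S3.
    enable_logging = {
        "ClusterIdentifier": cluster_id,
        "BucketName": bucket,
        "S3KeyPrefix": "redshift-audit/",
    }
    # The user activity log (each query, with the database user who ran it)
    # is only written when this parameter is true in the parameter group.
    modify_parameter_group = {
        "ParameterGroupName": param_group,
        "Parameters": [
            {
                "ParameterName": "enable_user_activity_logging",
                "ParameterValue": "true",
            }
        ],
    }
    return {
        "enable_logging": enable_logging,
        "modify_cluster_parameter_group": modify_parameter_group,
    }
```

With boto3 available, the payloads could be passed as `client.enable_logging(**reqs["enable_logging"])` and `client.modify_cluster_parameter_group(**reqs["modify_cluster_parameter_group"])`. The cluster must use a custom parameter group, because the default one cannot be modified.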

An online retailer needs to deploy a product sales reporting solution. The source data is exported from an external online transaction processing (OLTP) system for reporting. Roll-up data is calculated each day for the previous day's activities. The reporting system has the following requirements:

1. Have the daily roll-up data readily available for 1 year.
2. After 1 year, archive the daily roll-up data for occasional but immediate access.
3. The source data exports stored in the reporting system must be retained for 5 years. Query access will be needed only for re-evaluation, which may occur within the first 90 days.

Which combination of actions will meet these requirements while keeping storage costs to a minimum? (Choose two.)

A. Store the source data initially in the Amazon S3 Standard-Infrequent Access (S3 Standard-IA) storage class. Apply a lifecycle configuration that changes the storage class to Amazon S3 Glacier Deep Archive 90 days after creation, and then deletes the data 5 years after creation.

B. Store the source data initially in the Amazon S3 Glacier storage class. Apply a lifecycle configuration that changes the storage class from Amazon S3 Glacier to Amazon S3 Glacier Deep Archive 90 days after creation, and then deletes the data 5 years after creation.

C. Store the daily roll-up data initially in the Amazon S3 Standard storage class. Apply a lifecycle configuration that changes the storage class to Amazon S3 Glacier Deep Archive 1 year after data creation.

D. Store the daily roll-up data initially in the Amazon S3 Standard storage class. Apply a lifecycle configuration that changes the storage class to Amazon S3 Standard-Infrequent Access (S3 Standard-IA) 1 year after data creation.

E. Store the daily roll-up data initially in the Amazon S3 Standard-Infrequent Access (S3 Standard-IA) storage class. Apply a lifecycle configuration that changes the storage class to Amazon S3 Glacier 1 year after data creation.
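To make the options concrete, the lifecycle rules described in options A and D can be written in the structure that boto3's `put_bucket_lifecycle_configuration` accepts. This is a sketch: the `source-exports/` and `daily-rollups/` prefixes are hypothetical. The initial storage class is chosen at upload time (for example, `StorageClass="STANDARD_IA"` on `put_object`), and the lifecycle rules only govern later transitions and expiration.

```python
# Sketch: lifecycle rules in the shape used by put_bucket_lifecycle_configuration.
# The "source-exports/" and "daily-rollups/" prefixes are hypothetical.

FIVE_YEARS_DAYS = 5 * 365  # ignores leap days


def reporting_lifecycle() -> dict:
    return {
        "Rules": [
            {
                # Option A: exports uploaded as STANDARD_IA, moved to Deep Archive
                # after the 90-day re-evaluation window, deleted after 5 years.
                "ID": "source-exports",
                "Filter": {"Prefix": "source-exports/"},
                "Status": "Enabled",
                "Transitions": [{"Days": 90, "StorageClass": "DEEP_ARCHIVE"}],
                "Expiration": {"Days": FIVE_YEARS_DAYS},
            },
            {
                # Option D: roll-ups stay in STANDARD for a year, then move to
                # STANDARD_IA, which still serves reads without a restore step.
                "ID": "daily-rollups",
                "Filter": {"Prefix": "daily-rollups/"},
                "Status": "Enabled",
                "Transitions": [{"Days": 365, "StorageClass": "STANDARD_IA"}],
            },
        ]
    }
```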

A retail company leverages Amazon Athena for ad-hoc queries against an AWS Glue Data Catalog. The data analytics team manages the data catalog and data access for the company. The data analytics team wants to separate queries and manage the cost of running those queries by different workloads and teams. Ideally, the data analysts want to group the queries run by different users within a team, store the query results in individual Amazon S3 buckets specific to each team, and enforce cost constraints on the queries run against the Data Catalog.

Which solution meets these requirements?

A. Create IAM groups and resource tags for each team within the company. Set up IAM policies that control user access and actions on the Data Catalog resources.

B. Create Athena resource groups for each team within the company and assign users to these groups. Add S3 bucket names and other query configurations to the properties list for the resource groups.

C. Create Athena workgroups for each team within the company. Set up IAM workgroup policies that control user access and actions on the workgroup resources.

D. Create Athena query groups for each team within the company and assign users to the groups.
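Athena workgroups, named in option C, carry per-team result locations and per-query scan limits. The sketch below builds a request for boto3's `create_work_group`. The team name, bucket, and byte limit are hypothetical. The 10 MB floor reflects the documented minimum for `BytesScannedCutoffPerQuery`.

```python
# Sketch: per-team settings for Athena's create_work_group call.
# Team names, bucket names, and the scan limit are hypothetical.

MIN_CUTOFF_BYTES = 10_000_000  # documented minimum per-query limit (10 MB)


def workgroup_request(team: str, results_bucket: str, max_bytes_per_query: int) -> dict:
    if max_bytes_per_query < MIN_CUTOFF_BYTES:
        raise ValueError("per-query data usage limit must be at least 10 MB")
    return {
        "Name": f"{team}-workgroup",
        "Configuration": {
            # Query results for this team land in the team's own bucket.
            "ResultConfiguration": {"OutputLocation": f"s3://{results_bucket}/"},
            # Workgroup settings override client-side settings.
            "EnforceWorkGroupConfiguration": True,
            # Publish per-workgroup query metrics to CloudWatch.
            "PublishCloudWatchMetricsEnabled": True,
            # Cancel any query that scans more than this many bytes.
            "BytesScannedCutoffPerQuery": max_bytes_per_query,
        },
        "Tags": [{"Key": "team", "Value": team}],
    }
```

IAM policies can then allow or deny `athena:StartQueryExecution` and related actions on each workgroup's ARN, which keeps each team's users inside their own workgroup.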

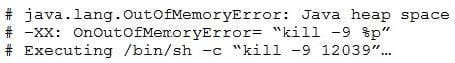

An operations team notices that a few AWS Glue jobs for a given ETL application are failing. The AWS Glue jobs read a large number of small JSON files from an Amazon S3 bucket and write the data to a different S3 bucket in Apache Parquet format with no major transformations. Upon initial investigation, a data engineer notices the following error message in the History tab on the AWS Glue console: "Command Failed with Exit Code 1."

Upon further investigation, the data engineer notices that the driver memory profile of the failed jobs crosses the safe threshold of 50% usage quickly and reaches 90-95% soon after. The average memory usage across all executors continues to be less than 4%.

The data engineer also notices the following error while examining the related Amazon CloudWatch Logs.

What should the data engineer do to solve the failure in the MOST cost-effective way?

A. Change the worker type from Standard to G.2X.

B. Modify the AWS Glue ETL code to use the 'groupFiles': 'inPartition' feature.

C. Increase the fetch size setting by using AWS Glue dynamic frames.

D. Modify maximum capacity to increase the total maximum data processing units (DPUs) used.
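The file grouping feature in option B is configured through S3 connection options. The sketch below only builds the options dictionary. The path and the 1 MB group size are hypothetical, and the `glueContext` call is shown as a comment because the `awsglue` library exists only inside the Glue runtime.

```python
# Sketch: S3 connection options that turn on AWS Glue file grouping.
# The input path and group size are hypothetical.


def grouped_json_options(path: str, group_size_bytes: int = 1_048_576) -> dict:
    return {
        "paths": [path],
        "recurse": True,
        # Coalesce many small files within each S3 partition into larger
        # groups, so the driver tracks far fewer individual file splits.
        "groupFiles": "inPartition",
        # Target size of each group in bytes; Glue takes it as a string.
        "groupSize": str(group_size_bytes),
    }


# Inside a Glue job script (awsglue is only available in the Glue runtime):
# dyf = glueContext.create_dynamic_frame.from_options(
#     connection_type="s3",
#     connection_options=grouped_json_options("s3://example-input/json/"),
#     format="json",
# )
```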

An online retail company uses Amazon Redshift to store historical sales transactions. The company is required to encrypt data at rest in the clusters to comply with the Payment Card Industry Data Security Standard (PCI DSS). A corporate governance policy mandates management of encryption keys using an on-premises hardware security module (HSM).

Which solution meets these requirements?

A. Create and manage encryption keys using AWS CloudHSM Classic. Launch an Amazon Redshift cluster in a VPC with the option to use CloudHSM Classic for key management.

B. Create a VPC and establish a VPN connection between the VPC and the on-premises network. Create an HSM connection and client certificate for the on-premises HSM. Launch a cluster in the VPC with the option to use the on-premises HSM to store keys.

C. Create an HSM connection and client certificate for the on-premises HSM. Enable HSM encryption on the existing unencrypted cluster by modifying the cluster. Connect to the VPC where the Amazon Redshift cluster resides from the on-premises network using a VPN.

D. Create a replica of the on-premises HSM in AWS CloudHSM. Launch a cluster in a VPC with the option to use CloudHSM to store keys.

A healthcare company ingests patient data from multiple data sources and stores it in an Amazon S3 staging bucket. An AWS Glue ETL job transforms the data, which is written to an S3-based data lake to be queried using Amazon Athena. The company wants to match patient records even when the records do not have a common unique identifier.

Which solution meets this requirement?

A. Use Amazon Macie pattern matching as part of the ETL job.

B. Train and use the AWS Glue PySpark filter class in the ETL job.

C. Partition tables and use the ETL job to partition the data on patient name.

D. Train and use the AWS Glue FindMatches ML transform in the ETL job.
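The FindMatches transform in option D is created with the Glue `create_ml_transform` API and then trained on labeled examples before an ETL job applies it. The sketch below builds the creation request only. The database, table, column, and role names are hypothetical, and the 0.9 tradeoff is an illustrative choice, not a recommendation.

```python
# Sketch: a create_ml_transform request for an AWS Glue FindMatches transform.
# Database, table, column, and role names are hypothetical.


def find_matches_request(database: str, table: str, key_column: str, role_arn: str) -> dict:
    return {
        "Name": "patient-record-matching",
        "InputRecordTables": [{"DatabaseName": database, "TableName": table}],
        "Parameters": {
            "TransformType": "FIND_MATCHES",
            "FindMatchesParameters": {
                # Uniquely identifies each row of the input table; it is not a
                # patient ID shared across sources (the records lack one).
                "PrimaryKeyColumnName": key_column,
                # 0.5 means no preference; values toward 1.0 favor precision.
                "PrecisionRecallTradeoff": 0.9,
            },
        },
        "Role": role_arn,
    }
```

After training with labeling task runs, the transform would typically be applied inside the Glue job with `FindMatches.apply` from `awsglueml.transforms`.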

A social media company is using business intelligence tools to analyze its data for forecasting. The company is using Apache Kafka to ingest the low-velocity data in near-real time. The company wants to build dynamic dashboards with machine learning (ML) insights to forecast key business trends. The dashboards must provide hourly updates from data in Amazon S3. Various teams at the company want to view the dashboards by using Amazon QuickSight with ML insights. The solution also must correct the scalability problems that the company experiences when it uses its current architecture to ingest data.

Which solution will MOST cost-effectively meet these requirements?

A. Replace Kafka with Amazon Managed Streaming for Apache Kafka. Ingest the data by using AWS Lambda, and store the data in Amazon S3. Use QuickSight Standard edition to refresh the data in SPICE from Amazon S3 hourly and create a dynamic dashboard with forecasting and ML insights.

B. Replace Kafka with an Amazon Kinesis data stream. Use an Amazon Kinesis Data Firehose delivery stream to consume the data and store the data in Amazon S3. Use QuickSight Enterprise edition to refresh the data in SPICE from Amazon S3 hourly and create a dynamic dashboard with forecasting and ML insights.

C. Configure the Kafka-Kinesis-Connector to publish the data to an Amazon Kinesis Data Firehose delivery stream that is configured to store the data in Amazon S3. Use QuickSight Enterprise edition to refresh the data in SPICE from Amazon S3 hourly and create a dynamic dashboard with forecasting and ML insights.

D. Configure the Kafka-Kinesis-Connector to publish the data to an Amazon Kinesis Data Firehose delivery stream that is configured to store the data in Amazon S3. Configure an AWS Glue crawler to crawl the data. Use an Amazon Athena data source with QuickSight Standard edition to refresh the data in SPICE hourly and create a dynamic dashboard with forecasting and ML insights.

A manufacturing company is storing data from its operational systems in Amazon S3. The company's business analysts need to perform one-time queries of the data in Amazon S3 with Amazon Athena. The company needs to access Athena from the on-premises network by using a JDBC connection. The company has created a VPC. Security policies mandate that requests to AWS services cannot traverse the internet.

Which combination of steps should a data analytics specialist take to meet these requirements? (Choose two.)

A. Establish an AWS Direct Connect connection between the on-premises network and the VPC.

B. Configure the JDBC connection to connect to Athena through Amazon API Gateway.

C. Configure the JDBC connection to use a gateway VPC endpoint for Amazon S3.

D. Configure the JDBC connection to use an interface VPC endpoint for Athena.

E. Deploy Athena within a private subnet.
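An interface VPC endpoint for Athena, as in option D, is created with the EC2 `create_vpc_endpoint` call. Reached over a private link such as Direct Connect, it keeps JDBC traffic off the internet. The sketch builds the request only. The Region, VPC, subnet, and security group IDs are hypothetical.

```python
# Sketch: EC2 create_vpc_endpoint request for an Athena interface endpoint.
# Region, VPC, subnet, and security group IDs are hypothetical.


def athena_endpoint_request(region: str, vpc_id: str, subnet_ids, sg_ids) -> dict:
    return {
        "VpcEndpointType": "Interface",
        "VpcId": vpc_id,
        "ServiceName": f"com.amazonaws.{region}.athena",
        # Endpoint network interfaces are placed in these private subnets.
        "SubnetIds": list(subnet_ids),
        # Must allow HTTPS (443) from the on-premises address ranges.
        "SecurityGroupIds": list(sg_ids),
        # Resolve the default Athena hostname to the endpoint's private IPs.
        "PrivateDnsEnabled": True,
    }
```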

A company is designing a support ticketing system for its employees. The company has a flattened LDAP dataset that contains employee data. The data includes ticket categories that the employees can access, relevant ticket metadata stored in Amazon S3, and the business unit of each employee.

The company uses Amazon QuickSight to visualize the data. The company needs an automated solution to apply row-level data restriction within the QuickSight group for each business unit. The solution must grant access to an employee when an employee is added to a business unit and must deny access to an employee when an employee is removed from a business unit.

Which solution will meet these requirements?

A. Load the dataset into SPICE from Amazon S3. Create a SPICE query that contains the dataset rules for row-level security. Upload separate .csv files to Amazon S3 for adding and removing users from a group. Apply the permissions dataset on the existing QuickSight users. Create an AWS Lambda function that will run periodically to refresh the direct query cache based on the changes to the .csv file.

B. Load the dataset into SPICE from Amazon S3. Create an AWS Lambda function that will run each time the direct query cache is refreshed. Configure the Lambda function to apply a permissions file to the dataset that is loaded into SPICE. Configure the addition and removal of groups and users by creating a QuickSight IAM policy.

C. Load the dataset into SPICE from Amazon S3. Apply a permissions file to the dataset to dictate which group has access to the dataset. Upload separate .csv files to Amazon S3 for adding and removing groups and users under the path that QuickSight is reading from. Create an AWS Lambda function that will run when a particular object is uploaded to Amazon S3. Configure the Lambda function to make API calls to QuickSight to add or remove users or a group.

D. Move the data from Amazon S3 into Amazon Redshift. Load the dataset into SPICE from Amazon Redshift. Create an AWS Lambda function that will run each time the direct query cache is refreshed. Configure the Lambda function to apply a permissions file to the dataset that is loaded into SPICE.
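The Lambda step in option C turns an uploaded .csv file into QuickSight group membership calls. The sketch assumes a hypothetical `action,user,group` CSV layout and maps each row to a `create_group_membership` or `delete_group_membership` request. In a real function, the CSV text would come from the S3 object named in the triggering event.

```python
# Sketch: map rows of a hypothetical "action,user,group" CSV to QuickSight
# group membership API requests (create/delete_group_membership).
import csv
import io


def membership_calls(csv_text: str, account_id: str, namespace: str = "default") -> list:
    calls = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        action = row["action"].strip().lower()
        if action == "add":
            op = "create_group_membership"
        elif action == "remove":
            op = "delete_group_membership"
        else:
            raise ValueError(f"unknown action: {row['action']}")
        calls.append((op, {
            "AwsAccountId": account_id,
            "Namespace": namespace,
            "GroupName": row["group"].strip(),
            "MemberName": row["user"].strip(),
        }))
    return calls
```

Each tuple could then be dispatched as `getattr(quicksight_client, op)(**kwargs)`. The row-level permissions dataset keyed on group name then controls which rows each group sees.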

A workforce management company has built business intelligence dashboards in Amazon QuickSight Enterprise edition to help the company understand staffing behavior for its customers. The company stores data for these dashboards in Amazon S3 and performs queries by using Amazon Athena. The company has millions of records from many years of data.

A data analytics specialist observes sudden changes in overall staffing revenue in one of the dashboards. The company thinks that these sudden changes are because of outliers in data for some customers. The data analytics specialist must implement a solution to explain and identify the primary reasons for these changes.

Which solution will meet these requirements with the LEAST development effort?

A. Add a box plot visual type in QuickSight to compare staffing revenue by customer.

B. Use the anomaly detection and contribution analysis feature in QuickSight.

C. Create a custom SQL script in Athena. Invoke an anomaly detection machine learning model.

D. Use S3 analytics storage class analysis to detect anomalies for data written to Amazon S3.

A company stores financial performance records of its various portfolios in CSV format in Amazon S3. A data analytics specialist needs to make this data accessible in the AWS Glue Data Catalog for the company's data analysts. The data analytics specialist creates an AWS Glue crawler in the AWS Glue console.

What must the data analytics specialist do next to make the data accessible for the data analysts?

A. Create an IAM role that includes the AWSGlueExecutionRole policy. Associate the role with the crawler. Specify the S3 path of the source data as the crawler's data store. Create a schedule to run the crawler. Point to the S3 path for the output.

B. Create an IAM role that includes the AWSGlueServiceRole policy. Associate the role with the crawler. Specify the S3 path of the source data as the crawler's data store. Create a schedule to run the crawler. Specify a database name for the output.

C. Create an IAM role that includes the AWSGlueExecutionRole policy. Associate the role with the crawler. Specify the S3 path of the source data as the crawler's data store. Allocate data processing units (DPUs) to run the crawler. Specify a database name for the output.

D. Create an IAM role that includes the AWSGlueServiceRole policy. Associate the role with the crawler. Specify the S3 path of the source data as the crawler's data store. Allocate data processing units (DPUs) to run the crawler. Point to the S3 path for the output.
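The crawler setup these options describe maps to the Glue `create_crawler` request. The sketch is illustrative: the crawler name, role ARN, path, database, and schedule are hypothetical. The managed policy ARN is the AWS-provided `AWSGlueServiceRole` policy. The role also needs read access to the source bucket.

```python
# Sketch: a Glue create_crawler request that writes table definitions for
# CSV data in S3 into a Data Catalog database. Names are hypothetical.

GLUE_SERVICE_POLICY_ARN = "arn:aws:iam::aws:policy/service-role/AWSGlueServiceRole"


def crawler_request(name: str, role_arn: str, s3_path: str, database: str) -> dict:
    return {
        "Name": name,
        # Role with GLUE_SERVICE_POLICY_ARN attached plus s3:GetObject on s3_path.
        "Role": role_arn,
        # Output: tables are created in this Data Catalog database.
        "DatabaseName": database,
        "Targets": {"S3Targets": [{"Path": s3_path}]},
        # AWS cron syntax: daily at 02:00 UTC.
        "Schedule": "cron(0 2 * * ? *)",
    }
```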

A company is running Apache Spark on an Amazon EMR cluster. The Spark job writes data to an Amazon S3 bucket and generates a large number of PUT requests. The number of objects has increased over time.

After a recent increase in traffic, the Spark job started failing and returned an HTTP 503 Slow Down AmazonS3Exception error.

Which combination of actions will resolve this error? (Choose two.)

A. Increase the number of S3 key prefixes for the S3 bucket.

B. Increase the EMR File System (EMRFS) retry limit.

C. Disable dynamic partition pruning in the Spark configuration for the cluster.

D. Increase the repartitioning number for the Spark job.

E. Increase the executor memory size on Spark.
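S3 supports at least 3,500 PUT/COPY/POST/DELETE requests per second per prefix, so spreading writes across more prefixes (option A) raises the aggregate limit. A higher EMRFS retry limit (option B) lets throttled requests back off and retry. The sketch shows both: an EMR configuration classification for `fs.s3.maxRetries` (the value 20 is illustrative) and a hypothetical hash-based key layout.

```python
# Sketch: an EMR configuration raising the EMRFS retry limit, and a
# hypothetical scheme that spreads object keys across hashed S3 prefixes.
import hashlib

EMRFS_RETRY_CONFIG = [
    {"Classification": "emrfs-site", "Properties": {"fs.s3.maxRetries": "20"}},
]


def prefixed_key(key: str, num_prefixes: int = 16) -> str:
    """Place a key under one of num_prefixes deterministic shard prefixes."""
    digest = hashlib.md5(key.encode("utf-8")).hexdigest()
    shard = int(digest, 16) % num_prefixes
    return f"shard-{shard:02d}/{key}"
```

Hashing keeps the mapping deterministic, so a reader can recompute where any key was written, while the writes spread roughly evenly over the prefixes.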

A company wants to use automatic machine learning (ML) to create and visualize forecasts of complex scenarios and trends. Which solution will meet these requirements with the LEAST management overhead?

A. Use an AWS Glue ML job to transform the data and create forecasts. Use Amazon QuickSight to visualize the data.

B. Use Amazon QuickSight to visualize the data. Use ML-powered forecasting in QuickSight to create forecasts.

C. Use a prebuilt ML AMI from the AWS Marketplace to create forecasts. Use Amazon QuickSight to visualize the data.

D. Use Amazon SageMaker inference pipelines to create and update forecasts. Use Amazon QuickSight to visualize the combined data.

A company stores transaction data in an Amazon Aurora PostgreSQL DB cluster. Some of the data is sensitive. A data analytics specialist must implement a solution to classify the data in the database and create a report. Which combination of steps will meet this requirement with the LEAST development effort? (Choose two.)

A. Create an Amazon S3 bucket. Export an Aurora DB cluster snapshot to the bucket.

B. Create an Amazon S3 bucket. Create an AWS Lambda function to run Amazon Athena federated queries on the database and to store the output as S3 objects in Apache Parquet format.

C. Create an Amazon S3 bucket. Create an AWS Lambda function to run Amazon Athena federated queries on the database and to store the output as S3 objects in CSV format.

D. Create an AWS Lambda function to analyze the bucket contents and create a report.

E. Create an Amazon Macie job to analyze the bucket contents and create a report.
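The snapshot export in option A and the Macie job in option E correspond to the RDS `start_export_task` and Macie `create_classification_job` APIs. The sketch builds both requests. All ARNs, the bucket, and the KMS key are hypothetical. The Macie job should run only after the export task completes, and its findings form the report.

```python
# Sketch: requests for exporting an Aurora snapshot to S3 (written as Parquet)
# and scanning the exported objects with a one-time Macie job.
# All ARNs, bucket names, and key IDs are hypothetical.
import uuid


def export_and_scan_requests(snapshot_arn: str, bucket: str, role_arn: str,
                             kms_key_id: str, account_id: str) -> dict:
    return {
        # rds.start_export_task
        "start_export_task": {
            "ExportTaskIdentifier": "aurora-export-for-macie",
            "SourceArn": snapshot_arn,
            "S3BucketName": bucket,
            "IamRoleArn": role_arn,
            "KmsKeyId": kms_key_id,
        },
        # macie2.create_classification_job
        "create_classification_job": {
            "clientToken": str(uuid.uuid4()),
            "jobType": "ONE_TIME",
            "name": "aurora-export-classification",
            "s3JobDefinition": {
                "bucketDefinitions": [{"accountId": account_id, "buckets": [bucket]}]
            },
        },
    }
```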

A company's data science team is designing a shared dataset repository on a Windows server. The data repository will store a large amount of training data that the data science team commonly uses in its machine learning models. The data scientists create a random number of new datasets each day.

The company needs a solution that provides persistent, scalable file storage and high levels of throughput and IOPS. The solution also must be highly available and must integrate with Active Directory for access control.

Which solution will meet these requirements with the LEAST development effort?

A. Store datasets as files in an Amazon EMR cluster. Set the Active Directory domain for authentication.

B. Store datasets as files in Amazon FSx for Windows File Server. Set the Active Directory domain for authentication.

C. Store datasets as tables in a multi-node Amazon Redshift cluster. Set the Active Directory domain for authentication.

D. Store datasets as global tables in Amazon DynamoDB. Build an application to integrate authentication with the Active Directory domain.
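The FSx for Windows File Server setup in option B corresponds to the FSx `create_file_system` request. The sketch assumes an AWS Managed Microsoft AD directory (a self-managed AD would use `SelfManagedActiveDirectoryConfiguration` instead). The directory, subnet, and security group IDs and the capacity figures are hypothetical. `MULTI_AZ_1` provides the high availability the question requires.

```python
# Sketch: an FSx create_file_system request for a Multi-AZ Windows file share
# joined to AWS Managed Microsoft AD. IDs and sizes are hypothetical.


def fsx_windows_request(ad_id: str, subnet_a: str, subnet_b: str, sg_id: str) -> dict:
    return {
        "FileSystemType": "WINDOWS",
        "StorageCapacity": 2048,  # GiB
        "StorageType": "SSD",
        # Multi-AZ deployments span two subnets in different AZs.
        "SubnetIds": [subnet_a, subnet_b],
        "SecurityGroupIds": [sg_id],
        "WindowsConfiguration": {
            "ActiveDirectoryId": ad_id,
            "DeploymentType": "MULTI_AZ_1",
            "PreferredSubnetId": subnet_a,  # subnet of the active file server
            "ThroughputCapacity": 512,  # MB/s
        },
    }
```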