Amazon MLS-C01 dumps - 100% Pass Guarantee!

Vendor: Amazon

Certifications: Amazon Certifications

Exam Name: AWS Certified Machine Learning - Specialty (MLS-C01)

Exam Code: MLS-C01

Total Questions: 394 Q&As

Last Updated: Mar 17, 2025

Note: The product is available for instant download. Please sign in and click My Account to download it.

PDF

- Q&As Identical to the VCE Product

- Windows, Mac, Linux, Mobile Phone

- Printable PDF without Watermark

- Instant Download Access

- Download Free PDF Demo

- Includes 365 Days of Free Updates

VCE

- Q&As Identical to the PDF Product

- Windows Only

- Simulates a Real Exam Environment

- Review Test History and Performance

- Instant Download Access

- Includes 365 Days of Free Updates

Amazon MLS-C01 Last Month Results

- 99.4% Pass Rate

- 365 Days Free Update

- Verified By Professional IT Experts

- 24/7 Live Support

- Instant Download PDF&VCE

- 3 Days Preparation Before Test

- 18 Years Experience

- 6000+ IT Exam Dumps

- 100% Safe Shopping Experience

MLS-C01 Q&A's Detail

| Exam Code | MLS-C01 |
| --- | --- |
| Total Questions | 394 |
| Single & Multiple Choice | 394 |

CertBus Has the Latest MLS-C01 Exam Dumps in Both PDF and VCE Format

- Amazon_certbus_MLS-C01_by_Isilon_326.pdf (227.46 KB)

- Amazon_certbus_MLS-C01_by_certtaker_324.pdf (227.27 KB)

- Amazon_certbus_MLS-C01_by_vaji_373.pdf (248.64 KB)

- Amazon_certbus_MLS-C01_by_serafino000_373.pdf (230.78 KB)

- Amazon_certbus_MLS-C01_by_Mudassir_323.pdf (226.63 KB)

- Amazon_certbus_MLS-C01_by_suthakaran_352.pdf (226.94 KB)

MLS-C01 Online Practice Questions and Answers

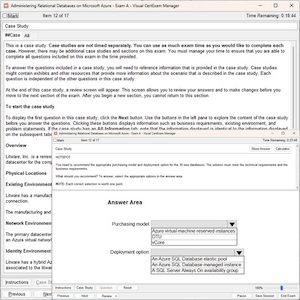

An aircraft engine manufacturing company is measuring 200 performance metrics in a time-series. Engineers want to detect critical manufacturing defects in near-real time during testing. All of the data needs to be stored for offline analysis.

What approach would be the MOST effective to perform near-real time defect detection?

A. Use AWS IoT Analytics for ingestion, storage, and further analysis. Use Jupyter notebooks from within AWS IoT Analytics to carry out analysis for anomalies.

B. Use Amazon S3 for ingestion, storage, and further analysis. Use an Amazon EMR cluster to carry out Apache Spark ML k-means clustering to determine anomalies.

C. Use Amazon S3 for ingestion, storage, and further analysis. Use the Amazon SageMaker Random Cut Forest (RCF) algorithm to determine anomalies.

D. Use Amazon Kinesis Data Firehose for ingestion and Amazon Kinesis Data Analytics Random Cut Forest (RCF) to perform anomaly detection. Use Kinesis Data Firehose to store data in Amazon S3 for further analysis.
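Options B and C analyze data after it lands in Amazon S3, whereas option D scores each record as it streams in. That per-record pattern can be sketched in plain Python. This is not Random Cut Forest: as a deliberately simpler stand-in, it assumes a rolling z-score over a sliding window of a single metric, and the class name, window size, and threshold are hypothetical. Every reading is archived (standing in for the copy Kinesis Data Firehose would deliver to S3), and only readings judged normal update the baseline.

```python
from collections import deque
from math import sqrt


class RollingZScoreDetector:
    """Score each incoming reading against a sliding window of recent readings.

    Hypothetical stand-in for streaming anomaly detection; this is NOT Random Cut Forest.
    `window` and `threshold` are illustrative tuning values, not recommended settings.
    """

    def __init__(self, window: int = 20, threshold: float = 4.0):
        self.window = deque(maxlen=window)  # recent normal readings (the baseline)
        self.threshold = threshold          # z-score above which a reading is flagged
        self.archive = []                   # stands in for the copy stored in S3 for offline analysis

    def process(self, value: float) -> bool:
        """Return True if `value` is anomalous relative to the current window."""
        self.archive.append(value)  # every record is kept, anomalous or not
        is_anomaly = False
        if len(self.window) >= 2:
            mean = sum(self.window) / len(self.window)
            var = sum((x - mean) ** 2 for x in self.window) / (len(self.window) - 1)
            std = sqrt(var)
            if std > 0 and abs(value - mean) / std > self.threshold:
                is_anomaly = True
        # Only normal readings update the baseline, so one spike does not mask the next.
        if not is_anomaly:
            self.window.append(value)
        return is_anomaly
```

With 200 metrics, one detector per metric (for example, a dict keyed by metric name) would be the direct extension of this sketch; RCF in Kinesis Data Analytics instead computes a single anomaly score per record from the record's numeric columns taken together.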

A company is using Amazon Textract to extract textual data from thousands of scanned text-heavy legal documents daily. The company uses this information to process loan applications automatically. Some of the documents fail business validation and are returned to human reviewers, who investigate the errors. This activity increases the time to process the loan applications.

What should the company do to reduce the processing time of loan applications?

A. Configure Amazon Textract to route low-confidence predictions to Amazon SageMaker Ground Truth. Perform a manual review on those words before performing a business validation.

B. Use an Amazon Textract synchronous operation instead of an asynchronous operation.

C. Configure Amazon Textract to route low-confidence predictions to Amazon Augmented AI (Amazon A2I). Perform a manual review on those words before performing a business validation.

D. Use Amazon Rekognition's feature to detect text in an image to extract the data from scanned images. Use this information to process the loan applications.
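Option C places a human review step on low-confidence results before business validation runs, instead of after a document has already failed it. The routing decision can be illustrated in plain Python. The sketch assumes response blocks shaped like Textract's `Blocks` output (`BlockType`, `Text`, and a 0-100 `Confidence`), and the function name and threshold of 90 are hypothetical; with Amazon A2I, the activation conditions are configured in a flow definition rather than hand-coded like this.

```python
from typing import Any


def split_by_confidence(blocks: list[dict[str, Any]], threshold: float = 90.0):
    """Split Textract-style WORD blocks into auto-accepted words and words needing review.

    `threshold` is a hypothetical cut-off on the 0-100 Confidence score.
    Returns two lists of word strings: (accepted, needs_review).
    """
    accepted, needs_review = [], []
    for block in blocks:
        if block.get("BlockType") != "WORD":
            continue  # this sketch routes individual words only
        # Missing confidence is treated as 0, so such words always go to review.
        target = accepted if block.get("Confidence", 0.0) >= threshold else needs_review
        target.append(block["Text"])
    return accepted, needs_review
```

Only the `needs_review` words would be sent to reviewers, so documents whose words are all high-confidence flow straight to business validation.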

A data engineer at a bank is evaluating a new tabular dataset that includes customer data. The data engineer will use the customer data to create a new model to predict customer behavior. After creating a correlation matrix for the variables, the data engineer notices that many of the 100 features are highly correlated with each other.

Which steps should the data engineer take to address this issue? (Choose two.)

A. Use a linear-based algorithm to train the model.

B. Apply principal component analysis (PCA).

C. Remove a portion of highly correlated features from the dataset.

D. Apply min-max feature scaling to the dataset.

E. Apply one-hot encoding to category-based variables.
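Option C (removing some of the highly correlated features) can be sketched with NumPy. The function name, the greedy keep-the-first-seen rule, and the 0.9 threshold on absolute Pearson correlation are assumptions for illustration. Option B, PCA, takes the other route: it combines correlated features into uncorrelated components instead of discarding them.

```python
import numpy as np


def drop_correlated_features(X: np.ndarray, threshold: float = 0.9) -> list[int]:
    """Return the column indices to keep after greedily dropping highly correlated features.

    `threshold` is a hypothetical cut-off on absolute Pearson correlation.
    """
    corr = np.abs(np.corrcoef(X, rowvar=False))  # feature-by-feature correlation matrix
    keep: list[int] = []
    for j in range(X.shape[1]):
        # Keep column j only if it is not highly correlated with any column already kept.
        if all(corr[j, k] < threshold for k in keep):
            keep.append(j)
    return keep


rng = np.random.default_rng(0)
base = rng.normal(size=(500, 1))
# Column 1 is a near-copy of column 0; column 2 is independent noise.
X = np.hstack([base, 2 * base + rng.normal(scale=0.01, size=(500, 1)), rng.normal(size=(500, 1))])
print(drop_correlated_features(X))  # expected: [0, 2]
```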

A data scientist is building a forecasting model for a retail company by using the most recent 5 years of sales records that are stored in a data warehouse. The dataset contains sales records for each of the company's stores across five commercial regions. The data scientist creates a working dataset with StoreID, Region, Date, and Sales Amount as columns. The data scientist wants to analyze yearly average sales for each region. The scientist also wants to compare how each region performed against average sales across all commercial regions.

Which visualization will help the data scientist better understand the data trend?

A. Create an aggregated dataset by using the Pandas GroupBy function to get average sales for each year for each store. Create a bar plot, faceted by year, of average sales for each store. Add an extra bar in each facet to represent average sales.

B. Create an aggregated dataset by using the Pandas GroupBy function to get average sales for each year for each store. Create a bar plot, colored by region and faceted by year, of average sales for each store. Add a horizontal line in each facet to represent average sales.

C. Create an aggregated dataset by using the Pandas GroupBy function to get average sales for each year for each region. Create a bar plot of average sales for each region. Add an extra bar in each facet to represent average sales.

D. Create an aggregated dataset by using the Pandas GroupBy function to get average sales for each year for each region. Create a bar plot, faceted by year, of average sales for each region. Add a horizontal line in each facet to represent average sales.
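The aggregation in options C and D (yearly average sales per region, plus a reference average for each year) can be sketched with pandas. The column names follow the working dataset described in the question; the function name is hypothetical, and the reference value is assumed to be the mean over all records in that year rather than the mean of the regional averages (the two differ when regions have different numbers of records).

```python
import pandas as pd


def yearly_region_averages(df: pd.DataFrame) -> tuple[pd.DataFrame, pd.Series]:
    """Return average sales per (year, region) and the all-region average per year."""
    df = df.assign(Year=pd.to_datetime(df["Date"]).dt.year)
    # One row per (Year, Region): the bars within each year's facet.
    by_region = df.groupby(["Year", "Region"], as_index=False)["Sales Amount"].mean()
    # Mean over all records in each year: the reference line for that year's facet.
    overall = df.groupby("Year")["Sales Amount"].mean()
    return by_region, overall
```

To draw the chart option D describes, each year's facet could plot the matching rows of `by_region` as bars and add that year's `overall` value with matplotlib's `Axes.axhline`.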

A data scientist obtains a tabular dataset that contains 150 correlated features with different ranges to build a regression model. The data scientist needs to achieve more efficient model training by implementing a solution that minimizes impact on the model's performance. The data scientist decides to perform a principal component analysis (PCA) preprocessing step to reduce the number of features to a smaller set of independent features before the data scientist uses the new features in the regression model.

Which preprocessing step will meet these requirements?

A. Use the Amazon SageMaker built-in algorithm for PCA on the dataset to transform the data.

B. Load the data into Amazon SageMaker Data Wrangler. Scale the data with a Min Max Scaler transformation step. Use the SageMaker built-in algorithm for PCA on the scaled dataset to transform the data.

C. Reduce the dimensionality of the dataset by removing the features that have the highest correlation. Load the data into Amazon SageMaker Data Wrangler. Perform a Standard Scaler transformation step to scale the data. Use the SageMaker built-in algorithm for PCA on the scaled dataset to transform the data.

D. Reduce the dimensionality of the dataset by removing the features that have the lowest correlation. Load the data into Amazon SageMaker Data Wrangler. Perform a Min Max Scaler transformation step to scale the data. Use the SageMaker built-in algorithm for PCA on the scaled dataset to transform the data.
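The preprocessing these options describe (scale features that have different ranges, then project onto principal components) can be sketched with NumPy. This is neither the SageMaker built-in PCA algorithm nor a Data Wrangler transform: it assumes a standard (z-score) scaler, computes PCA from an SVD of the centered data, and uses hypothetical function names. Scaling first matters because PCA follows variance, so an unscaled feature measured in thousands would dominate the first component regardless of how informative it is.

```python
import numpy as np


def standardize(X: np.ndarray) -> np.ndarray:
    """Scale each column to zero mean and unit variance, as a standard scaler does."""
    std = X.std(axis=0)
    std[std == 0] = 1.0  # leave constant columns unscaled to avoid dividing by zero
    return (X - X.mean(axis=0)) / std


def pca_transform(X: np.ndarray, n_components: int) -> np.ndarray:
    """Project X onto its top `n_components` principal components via SVD of the centered data."""
    Xc = X - X.mean(axis=0)
    # Rows of vt are the principal directions, ordered by decreasing singular value.
    _, _, vt = np.linalg.svd(Xc, full_matrices=False)
    return Xc @ vt[:n_components].T
```

The projected columns are mutually uncorrelated by construction, which is what makes them suitable as the smaller feature set the question describes for the downstream regression model.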

Success Stories

- Egypt

- Walls

- Mar 22, 2025

- Rating: 4.2 / 5.0

I love these dumps. They are really helpful and convenient. Strongly recommended.

- South Africa

- Noah

- Mar 20, 2025

- Rating: 5.0 / 5.0

HIGHLY recommend. Each question and answer is centered around something that must be known for this exam. Each answer is clear, concise, and accurate. They have explanations for the important questions, too. I suggest adding explanations for all questions. That would be more helpful.

- United States

- Wingate

- Mar 20, 2025

- Rating: 5.0 / 5.0

I took approximately a month and a half to study for the MLS-C01. I started off with these dumps and went through them question by question, as they suggested: "Go through all the questions and get understanding about the knowledge points then you will surely pass the exam easily." The dumps are a good supplement to a layered study approach.

- Greece

- Lara

- Mar 20, 2025

- Rating: 5.0 / 5.0

The dump is valid. Thanks for everything.

- London

- Kelly

- Mar 19, 2025

- Rating: 5.0 / 5.0

This resource was colossally helpful during my MLS-C01 studies. The practice tests are decent, and the downloadable content was great. I used this and two other textbooks as my primary resources, and I passed! Thank you!

- New Zealand

- Ziaul huque

- Mar 18, 2025

- Rating: 4.7 / 5.0

This study material is very useful and effective. If you do not have much time to prepare for your exam, this study material is your best choice.

- United States

- Nike

- Mar 18, 2025

- Rating: 4.3 / 5.0

These dumps are really good and useful. I passed the exam successfully and will share them with my friend.

- Sri Lanka

- Mussy

- Mar 16, 2025

- Rating: 4.4 / 5.0

These dumps are useful and convenient. I think they will be your best choice. Believe in them.

- Vancouver

- Morris

- Mar 13, 2025

- Rating: 5.0 / 5.0

Confirmed valid because I just passed my exam. Every question I got was in these dumps. Their dumps are really up to date and accurate. They will be your first choice if you do not have enough time to prepare for your exam. Using these dumps alone is enough, but be sure you understand the answers to the questions rather than only memorizing the options "mechanically".

- China

- Perry

- Mar 13, 2025

- Rating: 5.0 / 5.0

Hello, guys. I passed the exam successfully this morning. Thank you very much.

Amazon MLS-C01 exam official information: This credential helps organizations identify and develop talent with critical skills for implementing cloud initiatives. Earning AWS Certified Machine Learning - Specialty validates expertise in building, training, tuning, and deploying machine learning (ML) models on AWS.

Printable PDF