Databricks DATABRICKS-CERTIFIED-ASSOCIATE-DEVELOPER-FOR-APACHE-SPARK dumps - 100% Pass Guarantee!

Vendor: Databricks

Certifications: Databricks Certifications

Exam Name: Databricks Certified Associate Developer for Apache Spark 3.0

Exam Code: DATABRICKS-CERTIFIED-ASSOCIATE-DEVELOPER-FOR-APACHE-SPARK

Total Questions: 180 Q&As

Last Updated: Mar 14, 2025

Note: Product instant download. Please sign in and click My account to download your product.

- Q&As Identical to the VCE Product

- Windows, Mac, Linux, Mobile Phone

- Printable PDF without Watermark

- Instant Download Access

- Download Free PDF Demo

- Includes 365 Days of Free Updates

VCE

- Q&As Identical to the PDF Product

- Windows Only

- Simulates a Real Exam Environment

- Review Test History and Performance

- Instant Download Access

- Includes 365 Days of Free Updates

Databricks DATABRICKS-CERTIFIED-ASSOCIATE-DEVELOPER-FOR-APACHE-SPARK Last Month Results

99.7% Pass Rate

365 Days Free Update

Verified By Professional IT Experts

24/7 Live Support

Instant Download PDF&VCE

3 Days Preparation Before Test

18 Years Experience

6000+ IT Exam Dumps

100% Safe Shopping Experience

DATABRICKS-CERTIFIED-ASSOCIATE-DEVELOPER-FOR-APACHE-SPARK Q&A's Detail

| Exam Code: | DATABRICKS-CERTIFIED-ASSOCIATE-DEVELOPER-FOR-APACHE-SPARK |
| Total Questions: | 180 |

CertBus Has the Latest DATABRICKS-CERTIFIED-ASSOCIATE-DEVELOPER-FOR-APACHE-SPARK Exam Dumps in Both PDF and VCE Format

- Databricks_certbus_DATABRICKS-CERTIFIED-ASSOCIATE-DEVELOPER-FOR-APACHE-SPARK_by_Helio_169.pdf

- 241.49 KB

- Databricks_certbus_DATABRICKS-CERTIFIED-ASSOCIATE-DEVELOPER-FOR-APACHE-SPARK_by_Chi_Pui_152.pdf

- 249.72 KB

- Databricks_certbus_DATABRICKS-CERTIFIED-ASSOCIATE-DEVELOPER-FOR-APACHE-SPARK_by_Elmer_Fudd_145.pdf

- 253.56 KB

- Databricks_certbus_DATABRICKS-CERTIFIED-ASSOCIATE-DEVELOPER-FOR-APACHE-SPARK_by_Abdulrahman_Binsumaidea_from_Yemen_144.pdf

- 246.17 KB

- Databricks_certbus_DATABRICKS-CERTIFIED-ASSOCIATE-DEVELOPER-FOR-APACHE-SPARK_by_CCIE_New_145.pdf

- 248.68 KB

- Databricks_certbus_DATABRICKS-CERTIFIED-ASSOCIATE-DEVELOPER-FOR-APACHE-SPARK_by_sonny_155.pdf

- 249.12 KB

DATABRICKS-CERTIFIED-ASSOCIATE-DEVELOPER-FOR-APACHE-SPARK Online Practice Questions and Answers

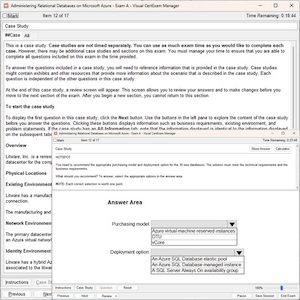

The code block displayed below contains an error. The code block is intended to write DataFrame transactionsDf to disk as a parquet file in location /FileStore/transactions_split, using column storeId as key for partitioning. Find the error.

Code block:

transactionsDf.write.format("parquet").partitionOn("storeId").save("/FileStore/transactions_split")

A. The format("parquet") expression is inappropriate to use here, "parquet" should be passed as first argument to the save() operator and "/FileStore/transactions_split" as the second argument.

B. Partitioning data by storeId is possible with the partitionBy expression, so partitionOn should be replaced by partitionBy.

C. Partitioning data by storeId is possible with the bucketBy expression, so partitionOn should be replaced by bucketBy.

D. partitionOn("storeId") should be called before the write operation.

E. The format("parquet") expression should be removed and instead, the information should be added to the write expression like so: write("parquet").

The code block displayed below contains multiple errors. The code block should remove column transactionDate from DataFrame transactionsDf and add a column transactionTimestamp in which dates that are expressed as strings in column transactionDate of DataFrame transactionsDf are converted into unix timestamps. Find the errors.

Sample of DataFrame transactionsDf:

+-------------+---------+-----+-------+---------+----+----------------+

|transactionId|predError|value|storeId|productId|   f| transactionDate|

+-------------+---------+-----+-------+---------+----+----------------+

|            1|        3|    4|     25|        1|null|2020-04-26 15:35|

|            2|        6|    7|      2|        2|null|2020-04-13 22:01|

|            3|        3| null|     25|        3|null|2020-04-02 10:53|

+-------------+---------+-----+-------+---------+----+----------------+

Code block:

transactionsDf = transactionsDf.drop("transactionDate")

transactionsDf["transactionTimestamp"] = unix_timestamp("transactionDate", "yyyy-MM-dd")

A. Column transactionDate should be dropped after transactionTimestamp has been written. The string indicating the date format should be adjusted. The withColumn operator should be used instead of the existing column assignment. Operator to_unixtime() should be used instead of unix_timestamp().

B. Column transactionDate should be dropped after transactionTimestamp has been written. The withColumn operator should be used instead of the existing column assignment. Column transactionDate should be wrapped in a col() operator.

C. Column transactionDate should be wrapped in a col() operator.

D. The string indicating the date format should be adjusted. The withColumnReplaced operator should be used instead of the drop and assign pattern in the code block to replace column transactionDate with the new column transactionTimestamp.

E. Column transactionDate should be dropped after transactionTimestamp has been written. The string indicating the date format should be adjusted. The withColumn operator should be used instead of the existing column assignment.
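
The sample values in transactionDate carry both a date and a time (for example 2020-04-26 15:35), so a date-only pattern cannot describe them in full. As a rough illustration outside Spark, the sketch below parses one sample value with Python's standard library. The `%Y-%m-%d %H:%M` directive string plays the role that a pattern such as `yyyy-MM-dd HH:mm` would play in Spark, and treating the value as UTC is an assumption made only so that the result is reproducible.

```python
from datetime import datetime, timezone

sample = "2020-04-26 15:35"  # first transactionDate value from the sample above

# A date-only format leaves " 15:35" unparsed, so strptime rejects the string.
try:
    datetime.strptime(sample, "%Y-%m-%d")
    date_only_ok = True
except ValueError:
    date_only_ok = False

# A format that also covers hours and minutes parses the full value.
parsed = datetime.strptime(sample, "%Y-%m-%d %H:%M")

# Assumption: interpret the value as UTC to get a deterministic unix timestamp.
unix_ts = int(parsed.replace(tzinfo=timezone.utc).timestamp())

print(date_only_ok, unix_ts)
```

This is plain Python rather than PySpark, so it only mirrors the format-string reasoning and says nothing about how Spark itself reacts to a mismatched pattern.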

Which of the following describes a shuffle?

A. A shuffle is a process that is executed during a broadcast hash join.

B. A shuffle is a process that compares data across executors.

C. A shuffle is a process that compares data across partitions.

D. A shuffle is a Spark operation that results from DataFrame.coalesce().

E. A shuffle is a process that allocates partitions to executors.
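
One way to picture a shuffle is as a redistribution of rows so that rows sharing a key end up in the same partition, which means every input partition may contribute to every output partition. The sketch below is a toy, single-process model in plain Python, not Spark code. The `shuffle_by_key` helper, the row shape, and the modulo partitioner are hypothetical stand-ins chosen for illustration.

```python
from typing import List, Tuple

Row = Tuple[int, str]  # (storeId, payload); a hypothetical row shape


def shuffle_by_key(partitions: List[List[Row]], num_out: int) -> List[List[Row]]:
    """Redistribute rows so that equal keys land in the same output partition."""
    out: List[List[Row]] = [[] for _ in range(num_out)]
    for part in partitions:  # every input partition is read
        for key, payload in part:
            # Hypothetical partitioner: key modulo the number of output partitions.
            out[key % num_out].append((key, payload))
    return out


before = [
    [(25, "a"), (2, "b")],
    [(25, "c"), (3, "d")],
]
after = shuffle_by_key(before, num_out=2)
print(after)
```

In the toy input, the two rows with key 25 start in different partitions and end up together, which is the kind of cross-partition movement that makes real shuffles expensive.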

Which of the following DataFrame methods is classified as a transformation?

A. DataFrame.count()

B. DataFrame.show()

C. DataFrame.select()

D. DataFrame.foreach()

E. DataFrame.first()
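
In Spark, transformations are evaluated lazily and only extend a plan, while actions trigger execution and return a result. The toy class below mimics that split in plain Python. `LazyFrame`, its methods, and the `executed` log are hypothetical names for illustration and are not part of the Spark API.

```python
executed = []  # grows each time a recorded plan step really runs


class LazyFrame:
    """Toy model: select() only records work, count() actually runs it."""

    def __init__(self, rows, plan=()):
        self._rows = rows
        self._plan = tuple(plan)

    def select(self, *cols):
        # Transformation-like: returns a new LazyFrame and touches no data yet.
        def step(row):
            executed.append(cols)
            return {c: row[c] for c in cols}

        return LazyFrame(self._rows, self._plan + (step,))

    def count(self):
        # Action-like: runs every recorded step on every row, then returns a value.
        n = 0
        for row in self._rows:
            for step in self._plan:
                row = step(row)
            n += 1
        return n


df = LazyFrame([
    {"transactionId": 1, "storeId": 25},
    {"transactionId": 2, "storeId": 2},
])
selected = df.select("storeId")       # nothing has run so far
steps_before_action = len(executed)
row_count = selected.count()          # the recorded step runs now
steps_after_action = len(executed)
print(steps_before_action, row_count, steps_after_action)
```

The model is deliberately naive: a real engine may optimize the plan before running it, so the number of executed steps here should not be read as a statement about Spark internals.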

Which of the following code blocks performs an inner join between DataFrame itemsDf and DataFrame transactionsDf, using columns itemId and transactionId as join keys, respectively?

A. itemsDf.join(transactionsDf, "inner", itemsDf.itemId == transactionsDf.transactionId)

B. itemsDf.join(transactionsDf, itemId == transactionId)

C. itemsDf.join(transactionsDf, itemsDf.itemId == transactionsDf.transactionId, "inner")

D. itemsDf.join(transactionsDf, "itemsDf.itemId == transactionsDf.transactionId", "inner")

E. itemsDf.join(transactionsDf, col(itemsDf.itemId) == col(transactionsDf.transactionId))

Success Stories

- United States

- John

- Mar 19, 2025

- Rating: 5.0 / 5.0

I signed up for the exam and ordered dumps from this site. I never attended any bootcamp or classes geared to exam or material preparation. However, I was shocked to find out how much time, money and energy people spent preparing to take this test. Honestly, it started to make me nervous, but it was too late to turn back. I just bought this and read it in 6 days, and I took the exam on the 7th day. That was enough. Just go through the dumps and take the test.

- United States

- Lychee

- Mar 18, 2025

- Rating: 4.4 / 5.0

Passed with 1000/1000. This dump is still valid. Thanks, all.

- United States

- Talon

- Mar 18, 2025

- Rating: 4.3 / 5.0

Still valid!! 97%

- India

- zuher

- Mar 15, 2025

- Rating: 4.7 / 5.0

Thanks for the advice. I passed my exam today! All the questions are from your dumps. Great job.

- United States

- Va

- Mar 15, 2025

- Rating: 4.1 / 5.0

I have not taken the exam yet, but I feel more and more confident about it after using these dumps. I am taking the exam this coming Saturday. I believe I will pass.

- Greece

- Rhys

- Mar 15, 2025

- Rating: 5.0 / 5.0

Updated quickly and rich in content. Great dumps.

- Saudi Arabia

- Quincy

- Mar 15, 2025

- Rating: 4.5 / 5.0

In the morning I received the good news that I had passed the exam with good marks. I'm so happy about that. Thanks for the help of this material.

- Ontario

- Cindy

- Mar 15, 2025

- Rating: 5.0 / 5.0

Very well written material. The questions are designed to help ensure good study habits and build the crucial skills needed to pass the exams and apply what was learned. I practiced my knowledge after I finished my courses! The dumps deserve 5 stars. The labs are also included. I would suggest looking at a workbook or taking courses. Combined with those, I suspect you'll be able to get more than just the lite versions of the labs.

- United States

- KP

- Mar 13, 2025

- Rating: 5.0 / 5.0

Very easy read. Bought the dumps a little over a month ago, read them question by question, attended an online course and passed the CISSP exam last Thursday. Did not use any other book in my study.

- China

- Perry

- Mar 13, 2025

- Rating: 5.0 / 5.0

Hello, guys. I passed the exam successfully this morning. Thank you very much.

Databricks DATABRICKS-CERTIFIED-ASSOCIATE-DEVELOPER-FOR-APACHE-SPARK exam official information: The Databricks Certified Associate Developer for Apache Spark certification exam assesses the understanding of the Spark DataFrame API and the ability to apply the Spark DataFrame API to complete basic data manipulation tasks within a Spark session.
