Hortonworks HADOOP-PR000007 dumps - 100% Pass Guarantee!

Vendor: Hortonworks

Certifications: HCAHD

Exam Name: Hortonworks Certified Apache Hadoop 2.0 Developer (Pig and Hive Developer)

Exam Code: HADOOP-PR000007

Total Questions: 108 Q&As

Last Updated: Mar 18, 2025

Note: Products are available for instant download. Please sign in and click My Account to download your product.

PDF

- Q&As Identical to the VCE Product

- Windows, Mac, Linux, Mobile Phone

- Printable PDF without Watermark

- Instant Download Access

- Download Free PDF Demo

- Includes 365 Days of Free Updates

VCE

- Q&As Identical to the PDF Product

- Windows Only

- Simulates a Real Exam Environment

- Review Test History and Performance

- Instant Download Access

- Includes 365 Days of Free Updates

Hortonworks HADOOP-PR000007 Last Month Results

- 99.2% Pass Rate

- 365 Days Free Update

- Verified By Professional IT Experts

- 24/7 Live Support

- Instant Download PDF&VCE

- 3 Days Preparation Before Test

- 18 Years Experience

- 6000+ IT Exam Dumps

- 100% Safe Shopping Experience

HADOOP-PR000007 Q&A Details

| Exam Code | HADOOP-PR000007 |
|---|---|
| Total Questions | 108 |
| Single & Multiple Choice | 108 |

CertBus Has the Latest HADOOP-PR000007 Exam Dumps in Both PDF and VCE Format

- Hortonworks_certbus_HADOOP-PR000007_by_Brianna_96.pdf (234.43 KB)

- Hortonworks_certbus_HADOOP-PR000007_by_fouad_99.pdf (230.48 KB)

- Hortonworks_certbus_HADOOP-PR000007_by_Jade_86.pdf (226.41 KB)

- Hortonworks_certbus_HADOOP-PR000007_by_M7mmd_90.pdf (237.31 KB)

- Hortonworks_certbus_HADOOP-PR000007_by_voterobformayor_104.pdf (226.75 KB)

- Hortonworks_certbus_HADOOP-PR000007_by_Ziaul_94.pdf (249.86 KB)

HADOOP-PR000007 Online Practice Questions and Answers

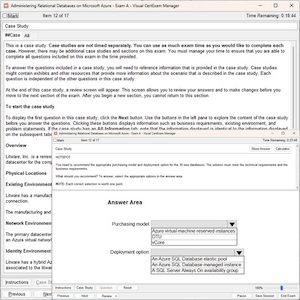

Given a directory of files with the following structure: line number, tab character, string. Example:

1	abialkjfjkaoasdfjksdlkjhqweroij

2	kadfjhuwqounahagtnbvaswslmnbfgy

3	kjfteiomndscxeqalkzhtopedkfsikj

You want to send each line as one record to your Mapper. Which InputFormat should you use to complete the line `conf.setInputFormat(____.class);`?

A. SequenceFileAsTextInputFormat

B. SequenceFileInputFormat

C. KeyValueTextInputFormat

D. BDBInputFormat

What does Pig provide to the overall Hadoop solution?

A. Legacy language integration with the MapReduce framework

B. Simple scripting language for writing MapReduce programs

C. Database table and storage management services

D. C++ interface to MapReduce and data warehouse infrastructure

Which Hadoop component is responsible for managing the distributed file system metadata?

A. NameNode

B. Metanode

C. DataNode

D. NameSpaceManager

You have user profile records in your OLTP database that you want to join with web logs you have already ingested into the Hadoop file system. How will you obtain these user records?

A. HDFS command

B. Pig LOAD command

C. Sqoop import

D. Hive LOAD DATA command

E. Ingest with Flume agents

F. Ingest with Hadoop Streaming

Which project gives you a distributed, scalable data store that allows random, real-time read/write access to hundreds of terabytes of data?

A. HBase

B. Hue

C. Pig

D. Hive

E. Oozie

F. Flume

G. Sqoop


Success Stories

- India

- Abbie

- Mar 19, 2025

- Rating: 4.5 / 5.0

I passed my exam this morning. I prepared with these dumps two weeks ago. These dumps are very valid. All the questions were in my exam. I still got 2 new questions, but luckily they were easy for me. Thanks for your help. I will recommend you to everyone I know.

- Singapore

- Teressa

- Mar 19, 2025

- Rating: 4.2 / 5.0

Wonderful dumps. I really appreciated these dumps, with so many new questions and such quick updates. Strongly recommended.

- United States

- TK

- Mar 17, 2025

- Rating: 5.0 / 5.0

I passed the exam on my first try using this. I really recommend using textbooks or study guides before you practice the exam questions. Depending on your background, this should be the only resource you'll need for exam HADOOP-PR000007.

- United States

- Jimmy

- Mar 16, 2025

- Rating: 5.0 / 5.0

Thank you for providing these very accurate exam dumps! There are great hints throughout your material that apply to studying any new subject. I agree completely about learning memorization tricks. One of my other tricks is to remember the content of the correct option.

- Bangladesh

- Orlando

- Mar 16, 2025

- Rating: 4.1 / 5.0

Many questions are from the dumps, but a few questions have changed, so pay attention.

- New York

- Terry

- Mar 14, 2025

- Rating: 5.0 / 5.0

Passed the exam easily with these dumps! The questions are valid and correct. I got no new questions in my actual exam. I prepared for my exam using only these dumps.

- Sri Lanka

- Miltenberger

- Mar 14, 2025

- Rating: 4.8 / 5.0

Passed today. I think it is very useful and sufficient for your exam, so trust it and you will succeed.

- India

- Terrell

- Mar 14, 2025

- Rating: 4.5 / 5.0

Valid. Passed today. So happy; I will recommend it to my friends.

- Sweden

- zera

- Mar 13, 2025

- Rating: 4.1 / 5.0

Passed today with the HADOOP-PR000007 braindump. There were only 3-4 new questions, and I handled them without any problems. Thank you all!

- Pakistan

- zia

- Mar 13, 2025

- Rating: 4.1 / 5.0

I took my exam yesterday and passed. Questions are valid. Customer support was great. Thanks for your help.
