CCA-505 Online Practice Questions and Answers

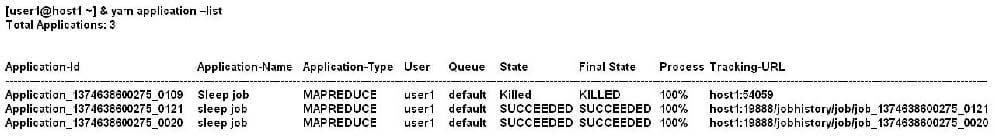

Given:

You want to clean up this list by removing jobs whose state is KILLED. What command do you enter?

A. yarn application -kill application_1374638600275_0109

B. yarn rmadmin -refreshQueue

C. yarn application -refreshJobHistory

D. yarn rmadmin -kill application_1374638600275_0109
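Whatever command the question expects, the clean-up itself amounts to filtering the listing by application state. The list in the image is not reproduced here, so this minimal sketch uses a hypothetical `App` record with invented IDs. It filters a local copy and does not change what the ResourceManager stores or reports.

```python
from dataclasses import dataclass


@dataclass
class App:
    app_id: str
    state: str  # YARN application state, e.g. "RUNNING", "FINISHED", "KILLED"


def drop_killed(apps):
    """Return a new list without the applications whose state is KILLED."""
    return [app for app in apps if app.state != "KILLED"]


# Hypothetical listing; these IDs and states are invented for illustration.
apps = [
    App("hypothetical_app_1", "KILLED"),
    App("hypothetical_app_2", "RUNNING"),
    App("hypothetical_app_3", "FINISHED"),
]

for app in drop_killed(apps):
    print(app.app_id, app.state)
```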

Which YARN daemon or service monitors a container's per-application resource usage (e.g., memory, CPU)?

A. NodeManager

B. ApplicationMaster

C. ApplicationManagerService

D. ResourceManager
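Per-container monitoring happens on the worker nodes: each NodeManager watches the process trees of the containers it launched and can kill a container that exceeds its memory allocation. The sketch below shows that check in simplified form, assuming hypothetical usage samples. `ContainerUsage` and `limit_violations` are illustrative names, not Hadoop classes; only the 2.1 default of `yarn.nodemanager.vmem-pmem-ratio` comes from Hadoop itself.

```python
from dataclasses import dataclass

# Default of yarn.nodemanager.vmem-pmem-ratio: virtual memory may reach
# 2.1x the container's physical memory allocation.
VMEM_PMEM_RATIO = 2.1


@dataclass
class ContainerUsage:
    container_id: str
    allocated_mb: int  # memory the scheduler granted to the container
    pmem_used_mb: int  # sampled resident (physical) memory of its process tree
    vmem_used_mb: int  # sampled virtual memory of its process tree


def limit_violations(c: ContainerUsage, ratio: float = VMEM_PMEM_RATIO) -> list:
    """Return which memory limits the sampled usage exceeds (empty if none)."""
    violations = []
    if c.pmem_used_mb > c.allocated_mb:
        violations.append("physical")
    if c.vmem_used_mb > c.allocated_mb * ratio:
        violations.append("virtual")
    return violations


# Hypothetical samples for three containers on one node.
samples = [
    ContainerUsage("container_a", 1024, 900, 1800),   # within both limits
    ContainerUsage("container_b", 1024, 1100, 2000),  # over the physical limit
    ContainerUsage("container_c", 1024, 700, 2500),   # over 1024 * 2.1 = 2150.4
]
for c in samples:
    print(c.container_id, limit_violations(c) or "ok")
```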

Which two are features of Hadoop's rack topology?

A. Configuration of rack awareness is accomplished using a configuration file. You cannot use a rack topology script.

B. Even for small clusters on a single rack, configuring rack awareness will improve performance.

C. Rack location is considered in the HDFS block placement policy.

D. HDFS is rack aware but MapReduce daemons are not.

E. Hadoop gives preference to intra-rack data transfer in order to conserve bandwidth.
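Rack location enters HDFS through its default block placement policy. For a replication factor of three, recent versions of the HDFS Architecture guide describe the rule as: first replica on the writer's node if the writer is a DataNode, second replica on a node in a different (remote) rack, third replica on a different node in that same remote rack. The sketch below encodes only that rule. The real policy also weighs free space and load and has fallbacks for small clusters, and when the writer is not a DataNode this sketch simply picks a random node. The topology and host names are hypothetical.

```python
import random


def place_three_replicas(topology, writer, rng):
    """Pick 3 DataNodes following the simplified default placement rule.

    topology maps node name -> rack id. Assumes at least two racks and at
    least two nodes in every rack.
    """
    nodes = sorted(topology)
    # 1st replica: the writer's own node if it is a DataNode, else any node.
    first = writer if writer in topology else rng.choice(nodes)
    # 2nd replica: a node on a different (remote) rack.
    remote = [n for n in nodes if topology[n] != topology[first]]
    second = rng.choice(remote)
    # 3rd replica: another node on the same remote rack as the 2nd.
    same_remote_rack = [n for n in nodes
                        if topology[n] == topology[second] and n != second]
    third = rng.choice(same_remote_rack)
    return [first, second, third]


# Hypothetical three-rack cluster.
topology = {
    "dn1": "/rack1", "dn2": "/rack1",
    "dn3": "/rack2", "dn4": "/rack2",
    "dn5": "/rack3", "dn6": "/rack3",
}
print(place_three_replicas(topology, "dn1", random.Random(42)))
```

Because the second and third replicas share a rack, the write pipeline crosses racks only once, which is where the bandwidth saving comes from.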

You are running a Hadoop cluster with MapReduce version 2 (MRv2) on YARN. You consistently see that MapReduce map tasks on your cluster are running slowly because of excessive JVM garbage collection. How do you increase the JVM heap size to 3 GB to optimize performance?

A. yarn.application.child.java.opts-Xax3072m

B. yarn.application.child.java.opts=-3072m

C. mapreduce.map.java.opts=-Xmx3072m

D. mapreduce.map.java.opts=-Xms3072m
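On the JVM, `-Xmx` sets the maximum heap size while `-Xms` only sets the initial size, so a 3 GB cap is written `-Xmx3072m` (3 × 1024 MB). Under YARN the heap must also fit inside the map container requested through `mapreduce.map.memory.mb`, because the JVM uses memory beyond the heap. The sketch below derives both values. Sizing the heap at about 80% of the container is a commonly cited rule of thumb, taken here as an assumption, and `map_task_settings` is a hypothetical helper.

```python
def map_task_settings(heap_mb: int, heap_percent: int = 80) -> dict:
    """Return mapred-site.xml values for a map task with the given max heap.

    heap_percent is an assumed rule of thumb: the container is sized so the
    heap takes about that share of it, leaving room for non-heap JVM memory.
    """
    # Ceiling division in integers avoids float rounding (3072 -> 3840).
    container_mb = (heap_mb * 100 + heap_percent - 1) // heap_percent
    return {
        # -Xmx caps the heap; -Xms would only set its starting size.
        "mapreduce.map.java.opts": f"-Xmx{heap_mb}m",
        "mapreduce.map.memory.mb": str(container_mb),
    }


for name, value in map_task_settings(3 * 1024).items():
    print(f"{name}={value}")
```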

You have a cluster running with the Fair Scheduler enabled. There are currently no jobs running on the cluster, and you submit Job A, so that only Job A is running on the cluster. A while later, you submit Job B. Now Job A and Job B are running on the cluster at the same time. How will the Fair Scheduler handle these two jobs?

A. When Job A gets submitted, it consumes all the task slots.

B. When Job A gets submitted, it doesn't consume all the task slots.

C. When Job B gets submitted, Job A has to finish first before Job B can be scheduled.

D. When Job B gets submitted, it will get assigned tasks, while Job A continues to run with fewer tasks.
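The Apache Fair Scheduler documentation describes this behavior: a single running application may use the whole cluster, and when other applications are submitted, resources that free up are given to them until each has roughly the same share. The sketch below computes the equal-weight max-min fair split that the scheduler converges toward. It ignores queues, weights, minimum shares, and preemption, and the slot counts and `fair_shares` helper are hypothetical.

```python
def fair_shares(total_slots: int, demands: dict) -> dict:
    """Equal-weight max-min fair split of slots among running jobs.

    demands maps job name -> number of tasks the job could run at once.
    A job never gets more than it asks for; leftover capacity is re-split
    among the jobs that still want more.
    """
    shares = {job: 0 for job in demands}
    remaining = total_slots
    active = sorted(job for job, d in demands.items() if d > 0)
    while remaining > 0 and active:
        per_job = remaining // len(active)
        if per_job == 0:
            # Fewer slots than hungry jobs: hand out the rest one by one.
            for job in active[:remaining]:
                shares[job] += 1
            break
        for job in active:
            grant = min(per_job, demands[job] - shares[job])
            shares[job] += grant
            remaining -= grant
        active = [job for job in active if shares[job] < demands[job]]
    return shares


print(fair_shares(100, {"A": 500}))            # only Job A: {'A': 100}
print(fair_shares(100, {"A": 500, "B": 500}))  # after Job B: {'A': 50, 'B': 50}
```

The split is a target, not an instant reassignment: without preemption, Job B reaches its share only as Job A's running tasks finish and release their resources.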

Your Hadoop cluster contains nodes in three racks. You have NOT configured the dfs.hosts property in the NameNode's configuration file. What results?

A. No new nodes can be added to the cluster until you specify them in the dfs.hosts file.

B. Presented with a blank dfs.hosts property, the NameNode will permit DataNodes specified in mapred.hosts to join the cluster.

C. Any machine running the DataNode daemon can immediately join the cluster.

D. The NameNode will update the dfs.hosts property to include machines running the DataNode daemon on the next NameNode reboot or with the command dfsadmin -refreshNodes.
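The `dfs.hosts` property names an include file listing the hosts allowed to connect to the NameNode; the `hdfs-default.xml` description states that when the value is empty, all hosts are permitted. The sketch below models only that include check. The exclude file (`dfs.hosts.exclude`) and decommissioning are left out, and the hostnames and the simplified `parse_include_file` parser are hypothetical.

```python
def parse_include_file(text: str) -> set:
    """Collect hostnames from include-file text (whitespace separated,
    '#' starts a comment). Simplified, hypothetical parser."""
    hosts = set()
    for line in text.splitlines():
        line = line.split("#", 1)[0]
        hosts.update(line.split())
    return hosts


def may_register(host: str, include_hosts: set) -> bool:
    """An empty include set stands for an unset dfs.hosts: every host is allowed."""
    return not include_hosts or host in include_hosts


print(may_register("dn7.example.com", set()))  # dfs.hosts not configured
include = parse_include_file("dn1.example.com\ndn2.example.com  # rack 1\n")
print(may_register("dn7.example.com", include))
```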

What must you do if you are running a Hadoop cluster with a single NameNode and six DataNodes, and you want to change a configuration parameter so that it affects all six DataNodes?

A. You must modify the configuration file on each of the six DataNode machines.

B. You must restart the NameNode daemon to apply the changes to the cluster.

C. You must restart all six DataNode daemons to apply the changes to the cluster.

D. You don't need to restart any daemon, as they will pick up changes automatically.

E. You must modify the configuration files on the NameNode only. DataNodes read their configuration from the master nodes.
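Hadoop daemons read their `*-site.xml` files from the configuration directory of the host they run on when they start, so one common way to roll out a DataNode setting is to generate the file once, copy it to every DataNode host, and restart those DataNodes. The sketch renders a Hadoop-style configuration document with the Python standard library. `render_site_xml`, the hostnames, and the example value are hypothetical, and the copy and restart steps are only printed, not performed.

```python
import xml.etree.ElementTree as ET


def render_site_xml(props: dict) -> str:
    """Render name/value pairs as a Hadoop <configuration> document."""
    root = ET.Element("configuration")
    for name, value in props.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = str(value)
    return ET.tostring(root, encoding="unicode")


# Example setting: reserve 10 GiB per volume for non-HDFS use.
hdfs_site = render_site_xml({"dfs.datanode.du.reserved": 10 * 1024**3})
print(hdfs_site)

# Hypothetical hostnames for the six DataNodes.
for host in [f"dn{i}.example.com" for i in range(1, 7)]:
    print(f"{host}: install hdfs-site.xml, then restart the DataNode")
```

In a real cluster the new property would be merged into each host's existing `hdfs-site.xml` rather than replacing the whole file.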

You are migrating a cluster from MapReduce version 1 (MRv1) to MapReduce version 2 (MRv2) on YARN. You want to maintain your MRv1 TaskTracker slot capacities when you migrate. What should you do?

A. Configure yarn.applicationmaster.resource.memory-mb and yarn.applicationmaster.cpu-vcores so that ApplicationMaster container allocations match the capacity you require.

B. You don't need to configure or balance these properties in YARN, as YARN dynamically balances resource management capabilities on your cluster.

C. Configure yarn.nodemanager.resource.memory-mb and yarn.nodemanager.resource.cpu-vcores to match the capacity you require under YARN for each NodeManager.

D. Configure mapred.tasktracker.map.tasks.maximum and mapred.tasktracker.reduce.tasks.maximum in yarn-site.xml to match your cluster's configured capacity set by yarn.scheduler.minimum-allocation.
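Under YARN a node's capacity is no longer counted in map and reduce slots; it is the memory and vcores each NodeManager advertises through `yarn.nodemanager.resource.memory-mb` and `yarn.nodemanager.resource.cpu-vcores`. One way to carry MRv1 capacity over is to multiply the old slot counts by the resources each slot was given. The sketch below assumes hypothetical figures (8 map slots, 4 reduce slots, 1024 MB and one vcore per slot), and `nodemanager_capacity` is an illustrative helper, not a Hadoop API.

```python
def nodemanager_capacity(map_slots: int, reduce_slots: int,
                         mb_per_slot: int, vcores_per_slot: int = 1) -> dict:
    """Translate MRv1 TaskTracker slot counts into NodeManager capacity."""
    slots = map_slots + reduce_slots
    return {
        "yarn.nodemanager.resource.memory-mb": slots * mb_per_slot,
        "yarn.nodemanager.resource.cpu-vcores": slots * vcores_per_slot,
    }


# Hypothetical MRv1 node: 8 map slots + 4 reduce slots, 1024 MB each.
for name, value in nodemanager_capacity(8, 4, 1024).items():
    print(f"{name}={value}")
```

If map and reduce tasks ran with different memory in MRv1, summing each slot type at its own size (and setting `mapreduce.map.memory.mb` and `mapreduce.reduce.memory.mb` accordingly) may match the old capacity more closely than a single per-slot figure.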